Hallucinating accurately, part two: The gist of Predictive Processing

Concluding with an Afterword on Diego Velázquez's Las Meninas

Let’s continue discussing The Experience Machine, by Andy Clark. My last post, Hallucinating accurately: What should psychiatry make of Predictive Processing, was a preamble on reconciling ourselves to counterintuitive scientific theories. Now, let’s take a stab at understanding the gist of Predictive Processing Theory before we get around to the promised topic of how it may be relevant to psychiatry. More generally, it may be relevant to the professions whose job is to intervene when our ways of interpreting the world and behaving in it have gone awry.

One of the brain’s main tasks is turning the barrage of signals that arises as we bump against the world into information. For us animals - large-ish living things that move about - three types of perception are essential: Exteroception, which involves perceiving the world “out there” through senses such as sight and hearing; Proprioception, which concerns the kinematics of the body, including muscle activity and joint positioning; and Interoception, which keeps the brain informed about the body's physiological states and the condition of its viscera.

I’ll limit myself to predictive processing’s account of visual perception, keeping in mind that the theory encompasses much more. In fact one of its selling points is that it proposes a unified computational process for perception and action. But to avoid biting off more than I can chew, let’s focus on visual, exteroceptive perception.

Another limitation to keep in mind is that this exposition is by a psychiatrist trying to understand the theory's implications. You could, of course, have a know-it-all AI chatbot summarize the theory for you, but the quirks and struggles of a human trying to wrap his head around it still have their advantages.

So what’s so difficult about perception?

The big idea of the entire book can be summarized in one sentence, adapted from the introduction: Our brains are prediction machines that generate experience from blends of expectation (predictions) and sensory evidence. Experience is not passively “absorbed” via a one-way uptake. Rather, the brain is constantly and actively proposing the meaning of what the senses are jabbering about.

Why do we need the complication of prediction in order to perceive the world around us? After all, in order to predict, one needs a store of experiences for predictions to be based upon. What a costly rigmarole it must be to maintain such a storehouse! Why not say that when we look at an apple it looks like an apple and we’re done? This is what our immediate, intuitive, lived experience - the “manifest image” - tells us.

Well, consider a few of the challenges of perceptual discrimination versus generalization when it comes to perceiving a particular apple. Many of its features overlap with those of other entities. On the other hand, the light reflected from this apple, in this light and from this angle, does not exactly match any apple-image one may have seen before. Beyond this challenge of discriminating despite similarity and generalizing despite difference, there is the “high dimensionality” of the information our sensory organs must process. “High dimensional” means that the information varies in myriad ways, that is, along many different “dimensions”. To make things harder, the input is not static. The apple one makes sense of at time t should keep its identity at time t+1. This holds even though the pattern of activation across the retina’s light receptors is completely scrambled by every tiny movement of the head. It is also scrambled by every saccade, the rapid eye movements driven by the extraocular muscles.

The job of the brain in this scenario is to take this overlapping, multidimensional, evanescent, slippery barrage and infer the causes of the current stimuli. Think of the enormity of this challenge as the foundational rationale for why predictive processing makes sense. Deploying what is already known, in the form of learned statistical patterns used as predictions, turns making sense of sensations into a tractable problem.

Finding patterns one by one

The starting point is that the aforementioned sensory “barrage”, despite being unspecific, multidimensional, and evanescent, is not just noise; it enfolds detectable patterns. The first order of business is detecting them, abstracting them, then, hierarchically, constructing increasingly abstract patterns.

One of the most thoroughly understood examples of hierarchical processing in the brain occurs in visual perception. Visual processing begins in the retina, the brain's most distant outpost. From there, partially processed information travels through fat-as-drinking-straws optic nerves to the lateral geniculate nucleus, and then to the primary visual cortex (V1) located at the back of the brain. The process continues as information moves forward to higher visual areas, including secondary visual cortex and associative areas such as the inferotemporal cortex.

So far this account of vision matches the old paradigm of feedforward processing. In that paradigm, what is to become experience is assembled in one direction by progressively more abstract filters (edges, textures, shapes, objects…). Simpler features are computed first, at “lower” levels closer to the sense organs, and then passed on to successively higher levels.

But there is a fact about how the brain is “wired up” that suggests the information flow does not just travel up a one-way street: it exhibits an abundance of “top-down wiring”. Connections from parts of the brain distal to the sensory organs aim "downwards" towards parts of the brain closer to "the outside world". Clark says that the number of such backwards connections may exceed the number of forward connections “by a very substantial margin, in some places by as much as four to one..." Whatever theory we come up with about how the brain works must account for all that reverse traffic.

Predictions: “You’ve seen this before, I bet it’s this…”

At this point you may wonder if this arrangement puts the cart before the horse. What could “higher-up” brain centers contribute that’s worth sending “down”? The answer is guesswork. At various levels of processing, a “generative model” produces ready-made chunks of interpretation. These are predictions, something like bets that what’s “out there” now is close enough to what was learned in the past.

These predictions are matched against the feedforward flow from the senses. Lower levels deal with concrete, sensory-specific data (like edges and colors in vision), while higher levels handle increasingly abstract information, like recognizing objects. Putting intentional language on it, it’s as if the processing went something like: “I bet that’s an edge - I’ll bet that color is red, even though its luminance and hue have changed now that it is in shadow - I’ll bet that’s a roughly spherical object the size of something I could hold in my hands… I’ll bet that’s an apple”.

But what is the guesswork based on? Past experience: the statistical regularities that have held up time and time again. Beyond what is learned, some utterly fundamental expectations, or “high-level priors”, may be innately “hard-wired”. This is just speculation, but notice how such fundamentals of experience sound like Kant’s “Categories” and “Forms of Intuition”.

Prediction errors: “You’ve seen this before…but, well, not exactly.”

Because predictions are guesses, they are mere sketches that can’t match the sensory signal exactly. Patterns in the world do recur, but every situation is new in some way, so predictions will be rough and occasionally completely off. When there is a discrepancy, the system generates prediction errors, signals that are key components of the process. Notice that the word “error” in this context does not connote malfunction. Prediction errors are perfectly good messages about how predictions and sensory information compare. They inform the system by traveling “upwards” to update the world model, tweaking it to match what is the case now. One could say that prediction errors are what keep us from “hallucinating inaccurately”.
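For readers who think in code, here is a toy sketch of the loop just described. It rests on heavy simplifying assumptions, and it is my illustration rather than Clark's. There is only a single level, and the “world” is just one hidden number. The generative model's prediction is simply its current best guess. Each noisy observation is compared with the prediction, and the prediction error nudges the guess. The names (`update_belief`, `learning_rate`, `true_value`) are hypothetical and chosen for readability.

```python
import random

def update_belief(belief, observation, learning_rate=0.1):
    """One step of a toy, single-level predictive loop."""
    prediction = belief                          # top-down guess about the input
    prediction_error = observation - prediction  # bottom-up mismatch signal
    # The error travels "upward" and revises the model a little.
    return belief + learning_rate * prediction_error, prediction_error

def run(true_value=5.0, noise=0.5, steps=200, seed=0):
    rng = random.Random(seed)
    belief = 0.0  # start with a poor model of the world
    errors = []
    for _ in range(steps):
        observation = true_value + rng.gauss(0.0, noise)  # noisy sensation
        belief, error = update_belief(belief, observation)
        errors.append(abs(error))
    return belief, errors

belief, errors = run()
print(round(belief, 2))
```

Early on, prediction errors are large. As the guess improves, they shrink toward the level of the sensory noise, which is the sense in which errors “update the world model” here.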

Of course, predictions cannot be “issued” without some idea of what the occasion calls for. They must be triggered by some early, sketchy “upstream information”.

It might seem to you (it did to me) that such a system is inefficient, with all its back-and-forth loops. But a metaphor proposed by Clark suggests how it might in fact be more efficient than straight-up feedforward processing. Consider a large company with multiple levels of management and employees working at different tiers. In such a company, it would be inefficient to report routine, predictable events to the CEO. As long as what happens at lower tiers is what upper tiers expect (“predict”), nothing more needs to be done. Only surprises, that is, departures from what is anticipated, are newsworthy and merit being sent up the management ladder. Those surprises are analogous to prediction errors in predictive processing.
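Clark's company metaphor can be caricatured in a few lines. This is a hedged toy. The fixed `tolerance` threshold and the function name `report_surprises` are my own illustrative assumptions. Predictive processing itself uses graded, precision-weighted errors rather than a hard cutoff. The point is only that a lower tier which already knows what to expect needs to pass up very little.

```python
def report_surprises(readings, expected, tolerance):
    """Pass upward only readings that depart from the expectation.

    Returns (position, prediction_error) pairs; routine readings stay local.
    """
    return [(i, r - expected) for i, r in enumerate(readings)
            if abs(r - expected) > tolerance]

readings = [10.1, 9.9, 10.0, 10.2, 14.5, 10.0, 9.8]
surprises = report_surprises(readings, expected=10.0, tolerance=0.5)
print(len(readings), "readings;", len(surprises), "sent up the ladder:", surprises)
```

Of seven readings, only the one that deviates markedly from expectation goes “up”. The other six are handled locally, which is where the hypothesized savings come from.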

The notion that predictive coding is efficient does not depend on simple metaphors like the one above. Indeed, there is evidence, at least from artificial neural networks, that it “naturally emerges as a simple consequence of energy efficiency”. This supports the notion that brains too should “continuously inhibit… predictable sensory input, sparing computational resources for input that promises high information gain.” (see Predictive coding is a consequence of energy efficiency in recurrent neural networks) 1

The brain as a generative “Large World Model”

But where is this storehouse of “predictions”? Recall from my last post that Conant and Ashby's Good Regulator Principle proved that good regulators must implement a model of the world. If this is true, brains must do so too! Luckily, in the era of in-our-face generative AI, we are familiar with generative models, such as Large Language Models or image generators like DALL-E. These models whip up coherent text and images based on the statistical regularities they have “picked up” from the world. “What I cannot create, I do not understand”, said Richard Feynman. We are now one-quarter of the way through the 21st century and have created generative artificial intelligence. Perhaps we understand a bit more about how the brain works as we experience LLM-generated conversations and DALL-E- and Sora-generated visual environments.

Precision weights: “Trustworthy! Important!… Dubious… Not important…”

So far we have built up a system that has no allowance for the uncertainty and noise of the world, or for dialing up the importance of some signals. So here the theory introduces the notion of precision weights that alter the relative impact of sensory stimulation, predictions and prediction errors.

Again we are encountering terms whose meaning differs from the ordinary. Here, “precision” refers to the degree of confidence or reliability attributed to an informational state. In this technical sense, precision is the inverse of uncertainty. The seemingly fuzzy notion of “uncertainty” also has a quantitative meaning: it is measured by the variance associated with a particular sensory input or prediction. Precision is therefore the inverse of that variance.
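To make “precision is the inverse of variance” concrete, here is a minimal sketch using Python's standard `statistics` module. I use the population variance for simplicity, which is an assumption of mine. The two sample lists are invented for illustration, one steady signal and one jittery one.

```python
import statistics

def precision(samples):
    """Precision = 1 / variance (population variance, for simplicity)."""
    return 1.0 / statistics.pvariance(samples)

steady = [1.0, 1.1, 0.9, 1.0, 1.0]   # readings that barely vary
shaky = [0.0, 2.0, 1.0, -1.0, 3.0]   # same mean, much more scatter

print(f"steady: {precision(steady):.1f}  shaky: {precision(shaky):.1f}")
```

Both signals average 1.0. The steady one has tiny variance and therefore high precision, while the shaky one has a variance of 2 and a precision of only 0.5.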

Introducing something like “adjustments” (weighting) may sound like finagling. Is it valid to just whip up an ad hoc “correction” to make it work? Saying that the brain “adjusts” things according to their “significance” or “uncertainty” is… very convenient!

Although we, the laity, must accept theoretical constructs like this on faith, there is an understandable logic to precision weighting. It’s not just some ad hoc add-on to patch up the theory. The concept of precision weights stems from principled reasoning about how biological systems encode uncertainty in their sensory inputs and predictions. There is also experimental evidence that the certainty or uncertainty of stimuli biases the brain’s decision making. For a peek at the complications of theory and neurophysiological evidence, see: Cerebral hierarchies: predictive processing, precision and the pulvinar 2

Formalized in statistical terms, the addition of precision weighting sounds less “hand-wavy”. It sounds more like something a brain could accomplish without a homunculus dictating what’s certain or uncertain. No little-man-in-the-brain is necessary, because “confidence” and “uncertainty” in this context are statistical terms. They do not refer to conscious feelings, as in “feeling confident”.

So again, at the abstract level of computation, precision is a weighting factor that can amplify or dampen aspects of information processing. Precision weights determine how much each source of information influences perceptual inference. If sensory input is deemed precise (reliable, or less uncertain), it will have a greater impact on updating the brain's predictions. If it is deemed imprecise, the predictions will hold sway.
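In the simplest textbook case, both the prediction and the sensory evidence are treated as Gaussian. There, precision weighting reduces to a weighted average. The share of the prediction error allowed to revise the percept is sensory precision divided by total precision. The sketch below assumes exactly that. I am not claiming that neurons literally compute this formula, and the function name `perceive` is my own.

```python
def perceive(prediction, prediction_precision, sensation, sensory_precision):
    """Precision-weighted blend of a prediction and a sensation (Gaussian case)."""
    prediction_error = sensation - prediction
    # Fraction of the prediction error allowed to revise the percept.
    gain = sensory_precision / (prediction_precision + sensory_precision)
    return prediction + gain * prediction_error

# Same prediction (0) and same sensation (10); only sensory precision differs.
clear_view = perceive(0.0, 1.0, 10.0, 4.0)   # reliable input dominates
foggy_view = perceive(0.0, 1.0, 10.0, 0.25)  # unreliable input is discounted
print(clear_view, foggy_view)
```

With reliable input, the percept lands close to the sensation (about 8). With unreliable input, it stays close to the prediction (about 2).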

Attending to what’s important

As described so far, predictive processing would be good enough for a robot, but not for living things, which have interests in surviving, flourishing, and reproducing. That’s because “low quality” information should not always be dismissed just because it is uncertain, if it alerts us to states of affairs that urgently matter. Conversely, a concurrent, high-precision, clear-as-a-bell sensory stream need not be dwelt on if it is of no consequence. Therefore, the theory must account for occasions when noisy, high-variance information “makes it all the way to the top”, contributing to behavior and updating internal models.

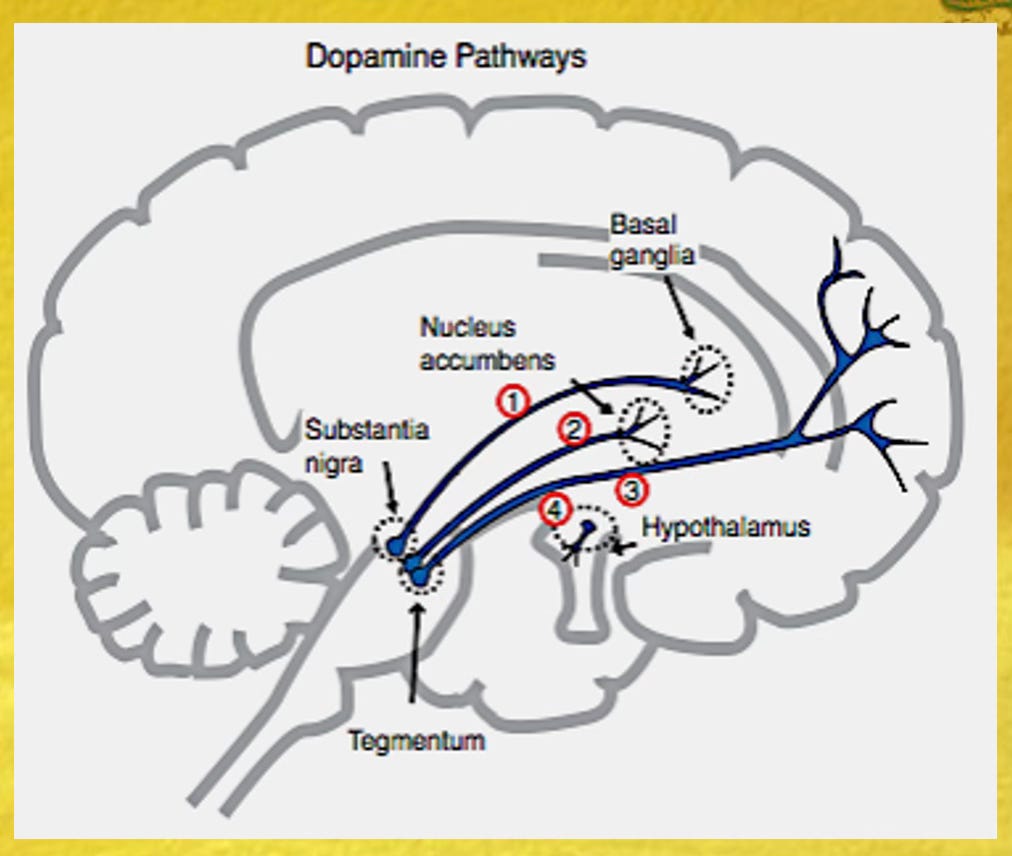

What kind of information is important to pay attention to, even if it is statistically uncertain? It’s whatever our emotional valence systems tag as good or bad. So the theory includes a way to elevate the “precision” of signals that have high emotional valence. How is this accomplished at the level of neurophysiology? Presumably, valence-related precision weighting involves tweaking information via the famous neuromodulating neurotransmitters, serotonin, noradrenaline, and dopamine.
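Here is a toy way to picture valence-driven precision. Take the same Gaussian precision-weighted update and multiply the sensory precision by a hypothetical `valence_gain`. This multiplier is purely my stand-in for neuromodulatory boosting. It is not a model of serotonin, noradrenaline, or dopamine. The scenario is invented: a faint, noisy cue that something is there (1.0) arrives while the model predicts nothing (0.0).

```python
def perceive(prediction, prediction_precision, sensation, sensory_precision,
             valence_gain=1.0):
    """Precision-weighted update in which valence_gain, a hypothetical
    multiplier, boosts the precision of signals tagged as important."""
    weighted_precision = sensory_precision * valence_gain
    gain = weighted_precision / (prediction_precision + weighted_precision)
    return prediction + gain * (sensation - prediction)

# A faint, low-precision cue (1.0) against a confident "nothing there" (0.0).
neutral = perceive(0.0, 4.0, 1.0, 0.5)                     # cue barely registers
alarming = perceive(0.0, 4.0, 1.0, 0.5, valence_gain=16.0)  # same cue, tagged as urgent
print(round(neutral, 3), round(alarming, 3))
```

The sensory input is identical in both calls. Only the hypothetical valence tag differs, and it lets the noisy cue dominate the percept.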

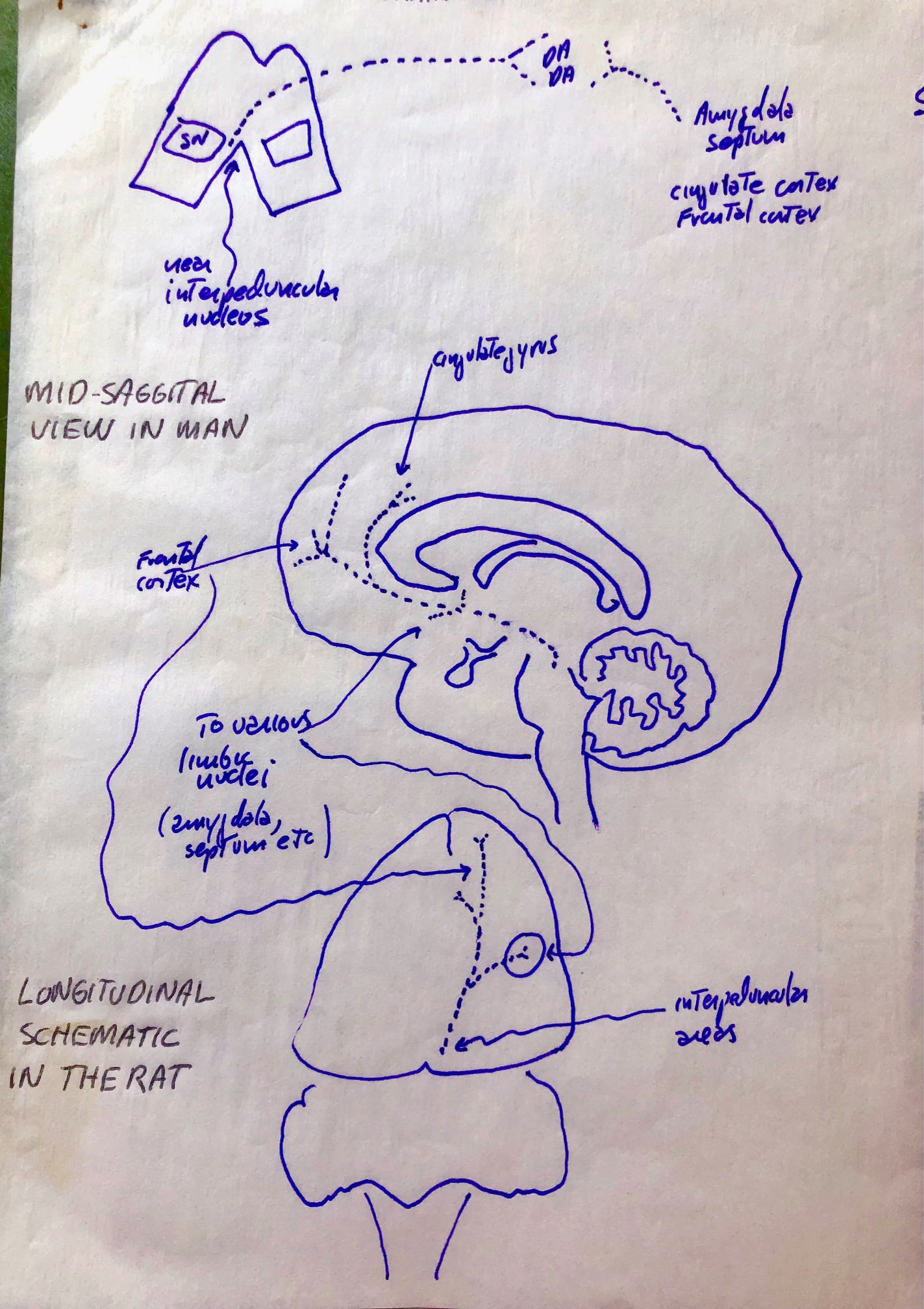

Invoking these three neurotransmitters bridges this theory to something psychiatrists are familiar with, namely the dopaminergic, noradrenergic and serotonergic tracts that fan out from the midbrain to all levels of the brain. These tracts have been known for a long time; what’s new is ascribing to them the specific function of modulating neuronal activity as part of predictive coding.

Contrary to popular notions that these famous neurotransmitters (especially dopamine) are “feel good” substances that bathe the brain like a shot of rum, they are in fact components of precisely targeted “wiring systems” that modulate the activity of specific neuronal populations. For example, here is a schematic diagram of the dopamine pathways:

I mentioned that these tracts have been known for a long time. How long? Well, here’s a diagram I found while throwing out papers dating back to my psychiatry residency in the mid-1980s:

Precision weights and psychopathology

In my next post I’ll say more about how predictive processing theory has the potential to inform clinical work. For now let’s just recap and view this possibility from afar.

Predictive processing proposes that we make sense of the world through four dynamic informational processes:
1) predictions, which are compared with
2) ongoing sensory data, which gives rise to
3) prediction errors when predictions and sensory information mismatch, and lastly
4) precision weighting, which adjusts the balance of all these elements based on their reliability and significance.
Thus, predictions may vary in their “stickiness” or “insistence”. When prediction and sensory data mismatch, the very signal of that mismatch, the prediction error, can itself be adjusted in its impact. It can be made “louder” or softer, or even dismissed.

If we agree that this is how the mind works, what happens if we turn the dials on the four processes? Lo and behold, we generate hypotheses that may explain neurodiversity, psychopathology, and personality differences. For example, one can imagine “bugs” such as positive feedback circularities. Imagine that precision weighting has bestowed excessive “certainty” on some prediction that does not match sensory information all that well. No matter, whatever was predicted will be experienced. This spuriously confirms the prediction/expectation, increasing the very likelihood of a dysfunctional take on the world happening again. For example, the minimal prick of a needle, predicted to be very painful, will hurt disproportionately, confirming the prediction and entrenching, say, a phobia of needles.
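The needle example can be caricatured as a feedback loop. This sketch rests on a loudly labeled assumption. It is not a Bayesian update; I simply invented the rule that whenever the felt experience lands within a `tolerance` of the expectation, the expectation's precision is multiplied by a `confirmation_boost`. Both parameters are hypothetical. Each trial also sets the next expectation to what was *felt*, rather than to the raw input.

```python
def needle_trials(expected_pain, expectation_precision, actual_pain=1.0,
                  sensory_precision=1.0, trials=10,
                  tolerance=1.0, confirmation_boost=2.0):
    """Toy self-confirming loop; tolerance and confirmation_boost are hypothetical."""
    felt_history = []
    for _ in range(trials):
        gain = sensory_precision / (expectation_precision + sensory_precision)
        felt = expected_pain + gain * (actual_pain - expected_pain)
        if abs(felt - expected_pain) < tolerance:
            # The experience "confirms" the expectation, so it is trusted more.
            expectation_precision *= confirmation_boost
        expected_pain = felt  # next time, expect what was felt
        felt_history.append(felt)
    return felt_history

# Both start out expecting severe pain (9) from a mild prick (1).
entrenched = needle_trials(expected_pain=9.0, expectation_precision=9.0)
corrected = needle_trials(expected_pain=9.0, expectation_precision=0.25)
print(round(entrenched[-1], 2), round(corrected[-1], 2))
```

With an over-confident expectation, each prick feels nearly as bad as predicted, and the “confirmation” hardens the prediction further. Felt pain stalls above 7 after ten trials. With a modest initial precision, the same prick quickly corrects the expectation toward the actual mild sensation.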

The efficiency of predictive processing comes with a heavy price: It abandons us to flounder dangerously close to maelstroms of mental suffering which can suck us in: Persecutory delusions, panic attacks, social anxiety, phobias, searing depressive cognitions that are impervious to reason. Perhaps we have found the materialist basis for the Wheel of Karma - just another speculation, of course…

We will come back to the idea that having a brain that works this way leaves us vulnerable to dysfunction and suffering. But understanding these vulnerabilities also promises ways we might avoid, cure, or mitigate suffering. That’s for my next post.

Should we sign on the dotted line?

Predictive processing seems a rather counterintuitive, roundabout way to explain our experience, because we grow into consciousness already immersed in its immediacy. Yes, the phenomenology of our experience is… what it is. But its immediacy and simplicity are an illusion. We need a refreshingly counterintuitive theory, and here we have one. It sits nicely at an intermediate level of abstraction, “above” neurophysiological entities such as neurons and circuits and “below” the phenomenology of perception. Beyond visual perception, the larger predictive processing story suggests hypotheses to explain aspects of psychopathology. Finally, predictive processing theory is enticingly intertwined with a Grand Unified Theory of the brain, and of life itself: the Free Energy Principle.

I for one would like to sign on the dotted line, but we should keep an open mind. There is some neuroscientific evidence supporting the theory. You will have noticed, though, that I have not attempted to review that evidence, such as it is; I deemed this, as they say, beyond the scope of my essay. But I do owe you at least a few references, if you’d like to pursue the thread: 1) Sensory Processing and the Rubber Hand Illusion; 2) Perceiving is believing: A Bayesian approach to explaining the positive symptoms of schizophrenia 3; 3) A Bayesian account of 'hysteria' 4

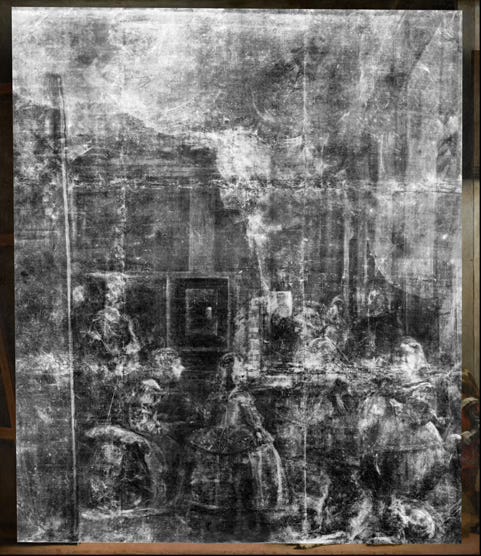

Afterword: Velázquez depicting depiction

After so many words, words and words, after so much labor for our brains’ left hemispheres and their language centers, I thought it would be refreshing to conclude with purely visual, aesthetic pleasure for our right brains.

So I needed a compelling image. But which one? I did not have to ponder long; the answer came to me immediately. I should conclude with a reproduction of Las Meninas, Diego Velázquez’s famous masterpiece (scroll to the end to see it). Never mind that it has nothing to do with predictive processing per se. It’s an inexhaustible playground for vision, engaging, tickling, feinting, tricking, and seducing every level of our brain's hierarchical visual processing.

We can start to enjoy it as a narrative. What is happening in the picture? Well, we see the Spanish royal family as it was in 1656. A five-year-old blonde girl, the daughter of the king and queen, lights up the center of the canvas. She is attended by an entourage of meninas (ladies-in-waiting), one of whom offers the princess water from a little red pitcher. The princess appears to have been entertained by two court dwarfs (shown on the right). One of them is impishly teasing the family dog, a majestic mastiff snoozing in the foreground. The little girl is protected by a chaperone and a guardian, who are chatting in the shadowy background.

But something has just happened that has turned the gaze of the princess towards us and has also caught the attention of one of the dwarfs. What? King Philip and Queen Mariana have entered “the room”. What room? It must be the same room we are in, for the painted personages are looking at us, in front of the canvas. How do we know it’s the king and queen? Because they (especially the king) are recognizable, seen reflected in the mirror on the back wall of the room.

That’s not all. Velázquez has had the chutzpah to depict himself in the family portrait! Presumably, he got along very well with the admiring king, so his effrontery was graciously tolerated. It’s said, in fact, that Las Meninas became King Philip’s favorite painting.

Finally, what was Velázquez painting on that huge canvas? What’s depicted on the other side? We shall never know. Debates rage on what it might have been: A portrait of the king and queen? After all, they are reflected in the back mirror, as if posing for a portrait. But no such portrait of the royal couple exists. Or was Velázquez painting Las Meninas itself, depicting himself depicting? If so, the painting depicts itself, or rather, just the back of itself…

I first saw Las Meninas in 1968, when I was ten years old. It is now displayed in a large gallery in the Prado Museum, but in the 1960s it hung alone in a small room. This room had a north-facing window that let soft daylight bathe the canvas, light that aligned with the illusionistic light of the painting. A mirror hung on the wall opposite the painting. It let visitors see the alternative universe brought to life by Velázquez without the limiting frame of the canvas.

Some writers and artists were in favor of all this stage setting, but some condemned it (especially the mirror) as a case of gilding the lily, a tasteless, intrusive apparatus. 5 The controversy ended when the canvas was relocated to a large windowless (and mirror-less) gallery. That is where you’ll see it today when you make your recommended, at-least-once-in-a-lifetime pilgrimage to see it.

I can understand the sentiments of those who found the mirror, the window and the dedicated room to be in bad taste. But I’m with those who were moved by seeing Las Meninas in that intimate space. People would say that entering it was like entering a chapel, and they emerged from it wiping tears from their eyes. A numinous presence was felt in the precinct… what was it? It was the Goddess of Vision! Paradoxically, she is invisible, so as not to get in the way of the light! She humbled the pilgrims, awakening them to the realization that they were endowed with vision. They had the supreme fortune of having been brought into Being in a light-filled universe that is there for the seeing.

Odds and ends

A lecture on YouTube: Andy Clark on "Predicting Peace: An end to the representation wars?"

There is some interest in predictive processing at UCSF; here are two articles by UCSF faculty. By Edward Chang et al:

Press release: To Appreciate Music, the Human Brain Listens and Learns to Predict; Article: Encoding of melody in the human auditory cortex

A major article by Robin Carhart-Harris and Karl Friston about predictive processing and psychedelics:

Ali, A., Ahmad, N., de Groot, E., van Gerven, M. A. J., & Kietzmann, T. C. (2022). Predictive coding is a consequence of energy efficiency in recurrent neural networks. Patterns, 3(12).

Kanai, R., Komura, Y., Shipp, S., & Friston, K. (2015). Cerebral hierarchies: predictive processing, precision and the pulvinar. Philosophical Transactions of the Royal Society B, 370, 20140169.

Fletcher, P., & Frith, C. (2009). Perceiving is believing: a Bayesian approach to explaining the positive symptoms of schizophrenia. Nature Reviews Neuroscience, 10, 48–58. https://doi.org/10.1038/nrn2536

Edwards, M. J., Adams, R. A., Brown, H., Pareés, I., & Friston, K. J. (2012). A Bayesian account of ‘hysteria’. Brain, 135(11), 3495–3512. https://doi.org/10.1093/brain/aws129

Excerpt from a colloquium sponsored by Museo del Prado: https://youtube.com/clip/Ugkxqb3FwN0n2CMZ9JW3s0-Pd9dQ-5Hoqxmj?si=umqkM4WdBlZKthri (in Spanish)